The Brain of Prof. Le Cun

In a remarkable paper (Le Cun, 2022) Turing Award recipient Yann Le Cun proposes a new cognitive architecture for intelligent agents. The questions this architecture seeks to address are drawn from cognitive psychology (animal and human learning abilities and world understanding). The paper also dramatizes a strong conviction in the universality of gradient-based learning. Confronting the architecture with well-known studies in experimental psychology and recent results in neuroscience, we argue that it is both misleading and truly inspiring.

On some ambiguities in its psychological foundation

In the paper, the need for a new architecture at this point in Artificial Intelligence (AI) and Machine Learning (ML) research is predicated on issues squarely relevant to cognitive psychology. Concepts such as ``human and animal learning abilities" and ``understandings of the world" are contrasted with the current class of Deep Learning (DL) systems, including Large Language Models (LLMs), whose performance and (sometimes questioned) reliability often require huge training datasets, massive amounts of human supervision data and numerous reinforcement trials. It would be misguided, however, to read this rooting in psychology simply as a step back to the early 1950s vision of an AI inspired by, if not copied from, the human mind. Whether the proposed new cognitive architecture (NCA) is psychologically plausible is a distinct question, though.

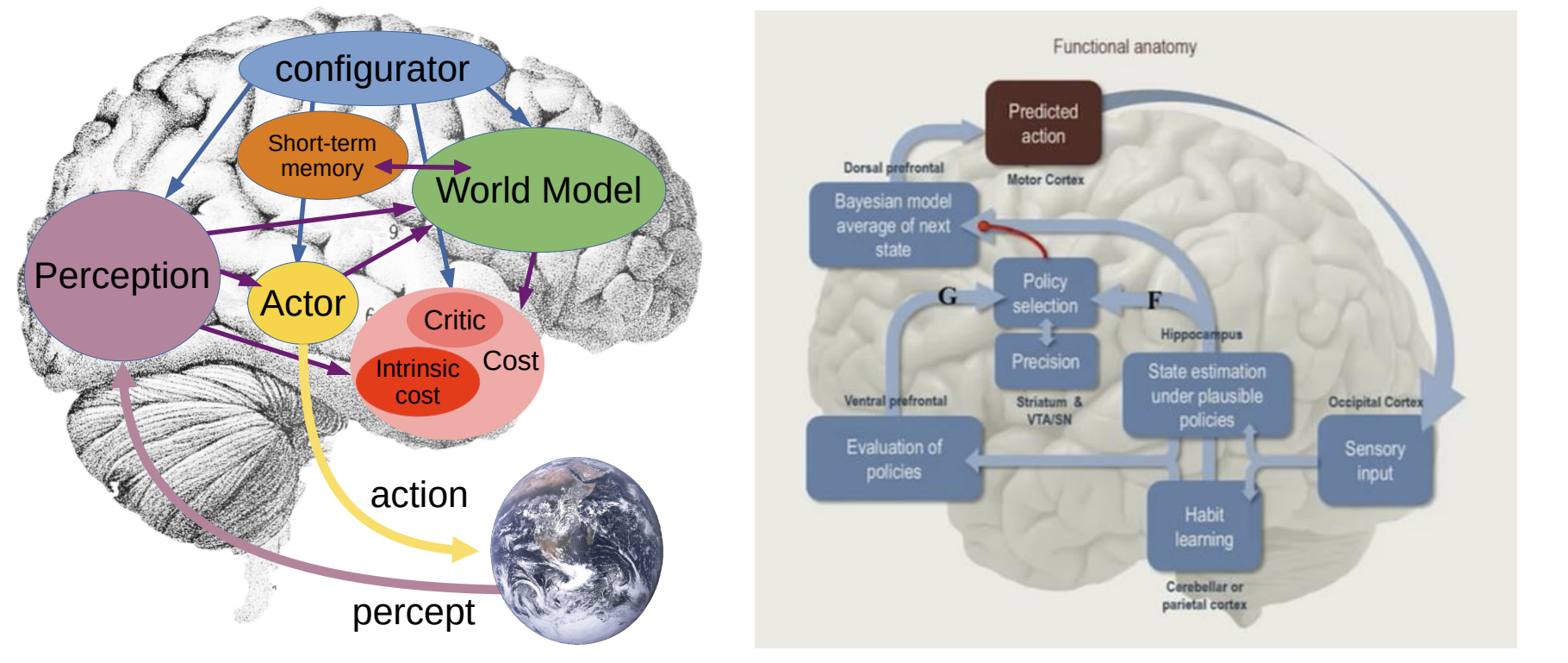

Fig. 2 in the paper may contribute to the ambiguity (see Figure 1 in this paper). On the one hand, the neural net NCA is diagrammed with modules whose labels are strongly reminiscent of the earlier period of classical, or symbolic, AI (``world model", ``working memory", ``reasoning, planning", ``critic"). But the schism between connectionism and symbolic AI, to adopt general terms, broke out in the discipline decades ago, almost at inception (Minsky and Papert, 1969, Smolensky, 1988). Paradoxically, these same labels were then rather lavishly used to illuminate the strengths in reasoning (Ernst and Newell, 1969, Newell, 1990, Anderson, 1995) and planning (Sacerdoti, 1977) of that period's iconic problem-solving systems.

On the other hand, in the same Fig. 2, the module diagram is overlaid on a depiction of the human brain, as if hinting at neurophysiological plausibility, although it only suggests which cortical areas would be thus designated. (A coarse-grained correspondence is offered in a later section of the paper.)

The modular diagram suggests that the ``autonomous machine intelligence" of the title aims at defining agents in the world that represent information about their environment (here a picture of Earth viewed from space in Fig. 2; maybe the agent is a remote robot in space exploration) and act in that environment. The activity is viewed in accord with the modular way in which that information is represented: having receptors, to pick up information about the agent's environment; having a ``computational domain" consisting of, or containing, representational ``mental states"; having a ``processor" which is the locus of changes to the computational domain; and having effectors, to act in and on the environment. Note that the notion of a ``body" is notably absent from the NCA at this point, so that, apparently in line with conservative connectionism, the anthropological approaches to sense-making and interpretation found in situated cognition (Suchman, 1987) are not relevant here. Similarly, the modular diagram of the NCA may evoke the view that cognition is computation on amodal symbols in a modular system, independent of the brain's modal systems for perception, action, and introspection, which is the central point rejected in grounded cognition (Barsalou, 2008). (However, Le Cun is a signatory of a recent call to revisit modern neuroscience as a pool of new inspirations for AI after the current success of LLMs (Zador et al., 2023), self-fashioned as NeuroAI, so the hint is really a signal to the cognoscenti.)

Instead here the ``world model" plays two roles: it has content (information about the environment) and it is a causal factor in the agent's activity. In its causal responsibility it supports two paths from perception to action: a direct one where the perception-thought module is connected to the thought-action module, and an indirect one connecting the perception-thought module to the inference module (which handles proposal, internal simulation, evaluation and planning of actions) and the latter to the thought-action module. The two modes are presented as analogous to Kahneman's well-known System 1 and System 2 (Kahneman, 2011). There is however a whiff of the Strong Modularity Assumption to these perception-action episodes (Barwise, 1987). In the current version of the NCA it remains unclear how it hooks to the environment, which surely includes the autonomous intelligent agent. But, see above, NeuroAI calls to bring back the environment into the picture of future AI directions–which immediately switches reference to Jakob von Uexküll's Umwelt (Uexküll et al., 1913, Gibson, 2014, Fultot and Turvey, 2019, Feiten, 2020) towards a whole new brand of AI.

Still, although not explicitly stated, the NCA is predicated on the unitary theory of mind (Anderson, 1995): ``all the higher cognitive processes, such as memory, language, problem solving, imagery, deduction and induction are different manifestations of the same underlying system". But it is difficult to appreciate how the NCA is positioned between (i) seeing the world as existing externally to our minds like in the ``externalism" of (Putnam, 1981), or the ``objectivism" in (Lakoff, 1987), and (ii) viewing the brain as a physical device to which the world is a subset of its internal processes, respectively ``internalism" or ``experiential realism" (Rapaport, 1991). From the former viewpoint, the NCA and minds can have attitudes (knowledge, belief, desires, etc.) about the world whereas from the latter it toils under a form of solipsism.

The idea that humans construct mental models of the world, and that they do so by using tacit mental processes, is, of course, not new. Indeed, mental models were posited back in (Johnson-Laird, 1983) as a path to the scientific understanding of cognition, a methodological attempt at bringing together ideas and results from experimental psychology, linguistics and the then mostly symbolic AI. Johnson-Laird then, like Le Cun today, further traced the idea back to (Craik, 1943), where understanding in these terms constitutes precisely what counts as an explanation. In 1943, Craik wrote:

By a model we thus mean any physical or chemical system which has a similar relation-structure to that of the process it imitates. […] My hypothesis then is that thought models, or parallels, reality–that its essential feature is not 'the mind', 'the self', 'sense-data', nor propositions but symbolism, and that this symbolism is largely of the same kind as that which is familiar to us in mechanical devices which aid thought and calculation.

It thus seems that Craik's original notion of a mental model, with its essential symbolism, is quite different from the contemporary, differentiable avatar inspired by Optimal Control theory at the core of the NCA model. This should not come as a surprise, as the decades-long AI debate in which symbolism confronts connectionism is anything but new (Chauvet, 2018, Hunt, 1989). Additionally, Mental Models theories themselves did not develop unquestioned (Newell, 1990, Baratgin et al., 2015, Sloman, 2007).

On the Differentiable Mental Model Theory

A Note on mental models in Cognitive Science and Neuroscience

Following Johnson-Laird, Mental Models theory met with many successes in cognitive psychology research of the 1980s and 1990s (e.g. mental model theory of inference and theory of semantic theory) (Johnson-Laird, 1980). Earlier on, ``intuitive theories" (Heider and Simmel, 1944) based in experimental psychology were quickly adopted to explain the competencies of people, notably infants, regarding the physical world, biology and life, and even the conceptualization of the social world (Gerstenberg and Tenenbaum, 2017). In its applications to spatial and temporal deductions, and to propositional inferences and syllogisms (Johnson-Laird and Byrne, 1991), the Mental Model theory developed by navigating the same domains of cognition that interested symbolic AI, namely reasoning and planning (Johnson-Laird, 2010), which to this day remain notoriously identified as weak areas for LLMs (Valmeekam et al., 2022).

In neuroscience, functional neuroimaging of the brain mechanisms related to the acquisition and the predictive switching of internal models for tool use (``intuitive theories") (Imamizu and Kawato, 2009) demonstrates that internal models are crucial for the execution of not only immediate actions but also higher-order cognitive functions, including assembly of attentional episodes (Duncan, 2013), optimization of behaviors toward long-term goals, robust separation of successive task steps (Duncan, 2010), social interactions based on prediction of others' actions and mental states, and language processing (Shulman et al., 1997). While this could support the neurophysiological plausibility of the NCA, the dynamics of coupling in the wide distribution of cortical areas involved is complex and difficult to unify under a single ``algorithm" (Cole and Schneider, 2007, Duncan et al., 2020, Buckner and DiNicola, 2019). Moreover, the ``default network" in the brain is not a single network, as historically described, but instead comprises multiple interwoven networks; it has been shown to be negatively correlated with other networks in the brain such as attention networks (Broyd et al., 2009).

A Note on symbolic mental models

The NCA seems to sail a bit away from ``symbolic" mental models above. Historically the frontiers between the connectionist and the symbolic approaches to cognitive modeling have been disputed territories (Smolensky, 1988). Within conservative DL, only a few interrelated guiding principles drive the design of machine intelligence:

- Renounce anthropomorphism, by not setting an objective to copy or reproduce the human brain. Although this sounds contradictory with the early beginnings of connectionism, it is well accepted in DL studies that artificial neural networks, old and new, are only loosely inspired by cerebral tissue. Furthermore, the existence of a form of backpropagation in the brain is also subject to debate (Lillicrap et al., 2020). Consequently, directly copying human cognitive processes or using purely symbolic instructions in the design are usually rejected as objectives (although they may be acceptable if interpreted indirectly as side-effects or as emergent (Flusberg and McClelland, 2017, Smolensky, 1986)).

- Adopt strict empiricism. The competences of the agent should only result from its experience. The performance of an artificial neural network should depend only on its training, given its graph of links and vertices.

Within the Cost Module of the NCA, the Intrinsic Cost sub-module seems an acknowledged relaxation of these constraints, introducing a hard-wired inductive bias. As detailed: ``The ultimate goal of the agent is minimize the intrinsic cost over the long run. This is where basic behavioral drives and intrinsic motivations reside." Whether a paraphrase of Asimov's ``Three Laws of Robotics" or not, the vocabulary used here explicitly references psychology: ``pain", ``pleasure", ``hunger", ``feeling 'good'", ``motivate agency or empathy"; it may be prompted by the heated contemporary question of ``alignment" (Marcus and Davis, 2019). But, under the above guiding principles, this vocabulary can only be used metaphorically here.

A Note on sensory information, inference and perception

In neurophysiology the concept of internal models was developed originally in motor neuroscience. As mentioned above, many intervening neuroscience studies suggested that it could be extended to explain the fundamental computational principles of higher-order cognitive functions in the brain, such as goal-directed behaviors, mirror systems, social interactions, communication, and languages (Cooper, 2010). The classification of models generally adopted in neurophysiology:

- Forward models transform efference copies of motor commands into the resultant trajectory or sensorimotor feedback. A forward model is a representation of the future state of a system.

- Inverse models transform intended actions or goals into the motor commands to reach those goals. Inverse models ``invert the causal flow": given a future desired state, they generate the motor command that is required to bring about that state.

has its theoretical roots in Control Theory.
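As a minimal sketch of the two kinds of model, assume a hypothetical one-dimensional effector whose position changes by the commanded velocity times a fixed time step. The dynamics, names and constants below are illustrative assumptions, not taken from the cited studies. In the usual convention, a forward model predicts the sensory consequence of a command, while an inverse model recovers the command that reaches a desired state:

```python
DT = 0.1  # assumed time step (seconds), illustrative only


def forward_model(position: float, velocity_command: float) -> float:
    """Predict the next position from the current one and an efference copy of the command."""
    return position + velocity_command * DT


def inverse_model(position: float, desired_position: float) -> float:
    """Return the velocity command that brings the effector to the desired position in one step."""
    return (desired_position - position) / DT


if __name__ == "__main__":
    start, goal = 0.2, 0.5
    command = inverse_model(start, goal)
    predicted = forward_model(start, command)
    print(f"command={command:.3f}, predicted position={predicted:.3f}")
```

Composing the two (inverse, then forward) returns the desired state, which is the sense in which one inverts the causal flow of the other.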

Optimal Control Theory, where the objective is ``to determine the control signals that will cause a process to satisfy the physical constraints and at the same time minimize, or maximize, some performance criterion" (Kirk, 2012), offers the mathematical toolkit for the development of these models. The rooting in Control Theory appears then crucial to the NCA model. This is particularly explicit in the statement of the third axiomatic universal differentiability principle. (And, on a lighter note, testifies to the impact of (Bryson and Ho, 1969) on Le Cun's work as he himself narrates in the autobiographical review of his research work (Le Cun, 2019).)
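To make the quoted objective concrete, here is a toy sketch under assumptions of our own choosing: a scalar linear process, a quadratic performance criterion, and plain gradient descent on the control signals. The backward pass is the adjoint recursion familiar from backpropagation. All names and constants are hypothetical, and nothing here is claimed to be the NCA's actual procedure.

```python
A, B = 1.0, 0.5        # assumed process dynamics: x_next = A * x + B * u
R, Q = 0.01, 1.0       # assumed weights: control effort and terminal error


def rollout(x0, controls):
    """Simulate the process and return the visited states x_0 .. x_T."""
    states = [x0]
    for u in controls:
        states.append(A * states[-1] + B * u)
    return states


def cost(x0, controls, target):
    """Performance criterion: control effort plus squared terminal error."""
    final_state = rollout(x0, controls)[-1]
    return R * sum(u * u for u in controls) + Q * (final_state - target) ** 2


def cost_gradient(x0, controls, target):
    """Gradient of the cost w.r.t. each control, via a backward (adjoint) pass."""
    final_state = rollout(x0, controls)[-1]
    adjoint = 2.0 * Q * (final_state - target)   # d cost / d x_T
    grad = [0.0] * len(controls)
    for t in reversed(range(len(controls))):
        grad[t] = 2.0 * R * controls[t] + B * adjoint
        adjoint = A * adjoint                    # d cost / d x_t
    return grad


def optimize_controls(x0, target, horizon=5, learning_rate=0.1, steps=500):
    """Plain gradient descent on the control sequence, starting from zeros."""
    controls = [0.0] * horizon
    for _ in range(steps):
        grad = cost_gradient(x0, controls, target)
        controls = [u - learning_rate * g for u, g in zip(controls, grad)]
    return controls


if __name__ == "__main__":
    best = optimize_controls(x0=0.0, target=1.0)
    print("controls:", [round(u, 3) for u in best])
    print("final state:", round(rollout(0.0, best)[-1], 3))
```

The effort weight `R` keeps the final state slightly short of the target: the criterion trades off reaching the goal against the size of the control signals.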

The differentiability in the proposed theory translates into the energy-based model nature of the NCA. The idea that the brain functions so as to minimize certain costs pervades theoretical neuroscience (Surace et al., 2020). However, optimization methods to train neuromorphic models are often borrowed from their artificial neural network analogs in ML, a field that has all along had intense interactions with theoretical and computational neuroscience. Principles of optimal control theory, and particularly the method of stochastic steepest descent, or gradient descent, were for instance very successfully imported into ML (Schmidhuber, 1990), allowing supervised learning techniques to be employed for reinforcement learning, the nuts and bolts of experimental cognitive psychology. This success in ML is unfortunately not necessarily indicative of a success in neuroscience at large (Surace et al., 2020). In fact, specific studies of intrinsic neuronal plasticity, of quantal amplitude and release probability in the binomial release model of a synapse, of synaptic plasticity and of models of spike trains, to mention a few examples, generally conclude that, since the definition of the gradient and the notion of a steepest direction depend critically on the choice of a metric, the very large number of degrees of freedom in the choice of a biologically relevant metric may call the predictive power of such models into question.
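The dependence on the metric can be illustrated with a toy example of our own, not drawn from the cited studies. For a positive-definite metric M, the steepest-descent direction is -M^{-1} g, where g is the vector of partial derivatives, so two metrics generally give two different ``steepest" directions for the same cost. The cost function and both metrics below are arbitrary assumptions.

```python
import math


def partial_derivatives(x, y):
    """Partial derivatives of the toy cost f(x, y) = x**2 + 10 * y**2."""
    return (2.0 * x, 20.0 * y)


def steepest_descent_direction(grad, metric):
    """Return -M^{-1} g for a symmetric positive-definite 2x2 metric M."""
    (m11, m12), (m21, m22) = metric
    det = m11 * m22 - m12 * m21
    gx, gy = grad
    # Solve M d = -g with the closed-form 2x2 inverse.
    dx = -(m22 * gx - m12 * gy) / det
    dy = -(-m21 * gx + m11 * gy) / det
    return (dx, dy)


def cosine(u, v):
    """Cosine of the angle between two 2-D vectors."""
    dot = u[0] * v[0] + u[1] * v[1]
    return dot / (math.hypot(*u) * math.hypot(*v))


EUCLIDEAN = ((1.0, 0.0), (0.0, 1.0))
RESCALED = ((1.0, 0.0), (0.0, 10.0))   # assumed alternative metric

if __name__ == "__main__":
    g = partial_derivatives(1.0, 1.0)
    d_euclid = steepest_descent_direction(g, EUCLIDEAN)
    d_rescaled = steepest_descent_direction(g, RESCALED)
    print("Euclidean metric:", d_euclid)
    print("Rescaled metric: ", d_rescaled)
    print("cosine between directions:", round(cosine(d_euclid, d_rescaled), 3))
```

Both directions decrease the cost, yet they point in visibly different directions; which one counts as ``the" gradient step depends entirely on the metric chosen.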

Within the Differentiable Mental Model Theory, alternative models to the NCA have been proposed. The Free-Energy Principle (FEP) for adaptive systems (Friston, 2010) aims at a unified account of action, perception and learning. In this influential and controversial theory (Raja et al., 2021, Ramstead, 2022, Walsh et al., 2020), the axiomatic principle is one of homeostasis: any ``self organizing system that is at equilibrium with its environment must minimize its free energy. The principle is essentially a mathematical formulation of how adaptive systems (that is, biological agents, like animals or brains) resist a natural tendency to disorder." Note that early ML researchers also developed an analogous formalism using a free-energy-like objective function for artificial neural networks (Hinton and Zemel, 1993), present in autoencoder networks whose progeny is mostly responsible for the success of today's Large Language Models.
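As a hedged illustration of what minimizing free energy means in the variational setting, the sketch below uses a made-up binary hidden cause. We assume the standard form from variational inference is the one at stake in both lines of work. It checks that the free energy of any belief q upper-bounds the surprise -log p(x), with equality when q is the exact posterior. The joint probabilities are arbitrary.

```python
import math

# Assumed joint probabilities p(x, z) for one fixed observation x
# and a binary hidden cause z; the numbers are arbitrary.
JOINT = {0: 0.30, 1: 0.10}


def surprise(joint):
    """Negative log evidence: -log p(x), with p(x) = sum_z p(x, z)."""
    return -math.log(sum(joint.values()))


def free_energy(belief, joint):
    """Variational free energy F(q) = sum_z q(z) * (log q(z) - log p(x, z))."""
    return sum(
        q * (math.log(q) - math.log(joint[z]))
        for z, q in belief.items()
        if q > 0.0
    )


def posterior(joint):
    """Exact posterior p(z | x), the belief that minimizes the free energy."""
    evidence = sum(joint.values())
    return {z: p / evidence for z, p in joint.items()}


if __name__ == "__main__":
    for name, q in [("uniform", {0: 0.5, 1: 0.5}), ("posterior", posterior(JOINT))]:
        print(f"{name:9s} F = {free_energy(q, JOINT):.4f}")
    print(f"surprise    = {surprise(JOINT):.4f}")
```

Lowering the free energy therefore does two things at once: it pulls the belief toward the posterior and it tightens a bound on how surprising the observation is.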

The FEP model entails a specific Bayesian process of minimization of free energy, labeled active inference, and comes complete with a mapping of its modules to designated cortical areas (Friston, 2016): ventral and dorsal prefrontal cortex (evaluation of policies and Bayesian model average of next state), striatum and VTA/SN (policy selection and precision), hippocampus (state estimation), cerebellum (habit learning) and occipital cortex for sensory input. (The anatomical diagram in Friston's presentation at the 2016 CCN Workshop on Predictive Coding is strikingly reminiscent of Fig. 2 in Le Cun's paper.)

These models, while inheriting their similarity from being distinct applications of the same control theory, may vary in mathematical formulations and overall behaviors. In the NCA, as in predictive processing, perception is the process of identifying the perceptual hypothesis that best predicts sensory input and hence minimizes prediction error. At the time of writing, it is still debated whether the NCA model (with its six interacting modules: configurator, perception, world model, actor, cost and short-term memory) maps to identified cortical areas, and whether it compares with feed-forward models in accommodating the neurophysiological and anatomical observations that have established the latter as the orthodox perspective on sensory processing (Walsh et al., 2020).
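Here is a minimal sketch of perception as prediction-error minimization, under the simplifying assumption of a linear generative model with a single hidden cause. The weights, step size and names are hypothetical and belong to neither the NCA nor any specific predictive-processing model.

```python
GENERATIVE_WEIGHTS = (2.0, -1.0)   # assumed mapping from hidden cause to two sensors


def predict(cause):
    """Sensory input predicted by the generative model for a hypothesized cause."""
    return tuple(w * cause for w in GENERATIVE_WEIGHTS)


def prediction_error(cause, observation):
    """Sum of squared differences between observed and predicted input."""
    return sum((o - p) ** 2 for o, p in zip(observation, predict(cause)))


def perceive(observation, step_size=0.05, iterations=200):
    """Refine the hypothesized cause by gradient descent on the prediction error."""
    cause = 0.0
    for _ in range(iterations):
        residuals = [o - p for o, p in zip(observation, predict(cause))]
        gradient = -2.0 * sum(w * r for w, r in zip(GENERATIVE_WEIGHTS, residuals))
        cause -= step_size * gradient
    return cause


if __name__ == "__main__":
    observed = predict(1.5)          # input generated by a true cause of 1.5
    estimate = perceive(observed)
    print(f"estimated cause: {estimate:.4f}")
    print(f"remaining error: {prediction_error(estimate, observed):.2e}")
```

The ``percept" here is simply the hypothesis at which the prediction error stops decreasing.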

A Note on emerging symbols

Another opportunity to revisit the question of symbols in the connectionist approach comes from many recent results in DL. In a famous paper, Smolensky (1988) translated the strict empiricism above into three hypotheses:

- Connectionist Dynamical System Hypothesis. The state of the ``processor" at any moment is precisely defined by a vector of numerical values, one for each unit (artificial neuron). The dynamics is governed by a differential equation whose parameters are the ``program" or ``knowledge".

- Sub-conceptual Unit Hypothesis. Semantics of conscious concepts (at the symbol level) are complex patterns of many units.

- Sub-conceptual Level Hypothesis. Complete, formal, and precise descriptions of the ``process" are generally tractable not at the conceptual level (the symbol level) but only at the sub-conceptual level.

and offered this summary as a cornerstone of the subsymbolic paradigm:

- The Subsymbolic Hypothesis. The process is a sub-conceptual connectionist dynamical system that does not admit a complete, formal, and precise conceptual-level description.
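The Connectionist Dynamical System Hypothesis can be illustrated with a toy two-unit network. This is a sketch under our own assumptions: the leaky dynamics dx/dt = -x + tanh(Wx), the weights and the Euler integration are illustrative choices, not Smolensky's. The state is a vector of activations, and the weight matrix plays the role of the ``program": here it makes the two units settle into a joint positive or joint negative pattern depending on the initial state.

```python
import math

WEIGHTS = ((0.0, 2.0), (2.0, 0.0))   # assumed mutual excitation between two units


def derivative(state):
    """Right-hand side of dx/dt = -x + tanh(W x)."""
    return tuple(
        -x_i + math.tanh(sum(w * x_j for w, x_j in zip(row, state)))
        for x_i, row in zip(state, WEIGHTS)
    )


def settle(state, dt=0.05, steps=2000):
    """Integrate the dynamics with forward Euler steps and return the final state."""
    for _ in range(steps):
        rates = derivative(state)
        state = tuple(x + dt * r for x, r in zip(state, rates))
    return state


if __name__ == "__main__":
    print("from (+0.2, +0.1):", tuple(round(x, 3) for x in settle((0.2, 0.1))))
    print("from (-0.2, -0.1):", tuple(round(x, 3) for x in settle((-0.2, -0.1))))
```

Nothing in the code names the two settled patterns: any conceptual-level reading of them (a stored ``memory", say) is an interpretation laid over the numerical dynamics.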

The explicit incompatibility of the symbolic and the subsymbolic paradigms in this conservative early formulation is certainly challenged by the intervening progress of DL. Autoencoders (Hinton and Zemel, 1993), used in dimensionality reduction, became widely used for learning generative models of data. Today neural nets can predict missing links in a multi-relational database, or in a knowledge graph (Minervini et al., 2017), using adversarial training; they can ``understand" logical formulae well enough to detect entailment (Evans et al., 2018). These results unquestionably represent a step towards the ability to capture hierarchical, complex structures present in the input, an ability that might be important for many language, reasoning and planning tasks.

While one could read in the success of current LLMs the refutation of the impossibility for neural networks to demonstrate compositionality (Drozdov et al., 2022), a traditional argument overused by the early critics of connectionism (Fodor and Pylyshyn, 1988, van Gelder, 1990), their symbolic interpretation remains elusive and seems to confirm Smolensky's axiom of 1988. This is a developing area of research, with such methods as reverse engineering neural networks in mechanistic interpretability (Olah, 2022) and prompt engineering in chain-of-thought or tree-of-thought (Wei et al., 2023, Yao et al., 2023).

The current criticism of DL systems and LLMs revolves around the themes of reliability and trust. As the narrative goes, this new generation of ML systems is poised to invade many, if not all, sectors of human activities and mediate all exchanges, in the near, remote or farther future, according to the prophecy. Whether stemming from concerns with the acceptability of such a scenario or with the defense against it, increasing reliability and instilling trust are often seen as the most urgent requirements to impose on ML systems, and they have generated vast volumes of regulatory and legal literature in a short period (Jobin et al., 2019). The NCA somehow circumvents the apparent obstacle by localizing symbolic behavior, however sub-symbolically dispersed into the optimal distribution of weights of a neural network, into a small set of modules with assigned functions (``Critic" and ``Intrinsic cost", ``Short-term memory", ``Configurator", etc.). Notwithstanding the Subsymbolic Hypothesis, the NCA then stands its ground when facing the two nagging issues raised by explainability in the wild (Vilone and Longo, 2021): What is to be explained and to whom? (Robbins, 2019) Can ML systems be rationalized? (Russell, 1997)

Conclusion

A cursory reading of the ``Brain" that Le Cun describes in his important 2022 paper (Le Cun, 2022) might seem unsettling, because it displays an apparent conflation of a rejuvenated, dusted-off Symbolic AI with the deep-rooted belief in the universality of gradient descent. Reviewing this new cognitive architecture from the viewpoints of cognitive psychology and neuroscience, however, suggests that, far from halfheartedly compromising between past postures in the AI debate, it seeks to inspire explorations into yet uncharted territories. The proposed architecture of cognition is a model of the computational cognitive theory (Cummins, 1991) which operates metaphorically at several levels of abstraction.

- By challenging the current AI doxa, the result of successive renunciations from the original ambitions over decades of successive AI winters and half-victory springs, the model returns bravely to the lost amplitude of the Strong AI research program, but from the vantage point of the recent explosive success of ML. The first station is autonomous agents, which typically interact with and adapt to their environment (Holland, 1975, Finn et al., 2017).

- The model avoids the conundrums of functionalism (Putnam, 1991) and radical connectionism, where insisting on the distinction between objects of computation and objects of interpretation always ends up in denying any direct explanatory relevance to computation. By including both, it serves two purposes. First: it skips the recurring question of the seat of symbols in connectionist systems, which were at times irrelevant and at others emergent or ``supervenient", as artifacts of computations over levels of activation and connection strengths (Smolensky, 1988). (Supervenience comes in localist flavor, where semantic interpretations are assigned to some individual nodes (Rumelhart and McClelland, 1982, McClelland and Rumelhart, 1981), or in distributed flavor where they are only assigned to network states (Hinton et al., 1986).) Second: it breaks from the prevalent view that problem-solving is the exclusive mark of the cognitive. Acknowledging that mental models are not enough, as we saw above, it embeds inner world-models into the activity of the agent.

- The key insight of the model is that, in the broader view of AI analyzed above, interaction and adaptation are tasks that bridge the differences between problem-solving and serving real-world real-time action. In the symbolic AI, problem-solving approach, the brain takes in data, performs a complex computation that solves the problem (catching a ball, say) and then instructs the body where to go. This is a linear processing cycle: perceive, compute and act (Ernst and Newell, 1969). In the second approach, the ``problem", if it is even posited as such, is not solved ahead of time. Instead, the task is to maintain, by multiple, real-time adjustments to the run, a kind of co-ordination between the inner and the outer worlds. Such co-ordination dynamics constitute something of a challenge to traditional ideas about perception and action: they replace the notion of rich internal representations and computations with the notion of likely less expensive strategies whose task is not first to represent the world and then reason on the basis of the representation, but instead to maintain a kind of adaptively potent equilibrium that couples the agent and the environment together (Clark, 1999).

- The key ingredients of the model rest in the innovative design of this bridge. On the one hand, the model treats inner reasoning and planning as something like simulated sensing and acting, thus preserving a special flavor of problem-solving alongside a high degree of ability to decouple from the environment. On the other, as understanding the complex interplay of brain, body and environment requires new analytic tools and methods from dynamical systems theory, the model relies uniformly and single-handedly on gradient-based methods sourced in Optimal Control Theory.
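The contrast drawn above between a one-shot perceive-compute-act cycle and continuous co-ordination can be made concrete with a deliberately crude ball-catching sketch. Everything here is an assumption for illustration (one-dimensional motion, a constant but misjudged ball speed, a capped agent speed); it is not a model from the cited literature. The open-loop agent commits to a landing point computed once from its belief, while the closed-loop agent simply keeps reducing the currently observed gap.

```python
def move_toward(position, goal, max_step):
    """Move toward the goal by at most max_step."""
    gap = goal - position
    return position + max(-max_step, min(max_step, gap))


def open_loop_miss(agent, ball, believed_speed, true_speed, steps, max_step):
    """Compute the landing point once, then walk there regardless of what happens."""
    planned_landing = ball + believed_speed * steps
    for _ in range(steps):
        ball += true_speed
        agent = move_toward(agent, planned_landing, max_step)
    return abs(ball - agent)


def closed_loop_miss(agent, ball, true_speed, steps, max_step):
    """Re-observe the ball at every step and reduce the current gap."""
    for _ in range(steps):
        ball += true_speed
        agent = move_toward(agent, ball, max_step)
    return abs(ball - agent)


if __name__ == "__main__":
    settings = dict(agent=5.0, ball=0.0, true_speed=1.0, steps=20, max_step=1.5)
    print("open-loop miss:  ", open_loop_miss(believed_speed=0.8, **settings))
    print("closed-loop miss:", closed_loop_miss(**settings))
```

In this toy setting the closed-loop agent needs no estimate of the ball's speed at all, whereas the open-loop agent inherits the full error of its initial belief.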

In closing we should now ask two related questions. How different is this account from prevalent classical architectures (Newell, 1990, Anderson, 1995, Haugeland, 1981, Rumelhart et al., 1986)? And will it work for all kinds of planning and reasoning or only some? The first question apparently leads to a mild dilemma, for the simulation-based account looks most clearly different from classical accounts involving mental models only insofar as it treats reasoning and planning as, quite literally, imagined interactions. What remains unclear, however, is the scope of this cognitive architecture. Indeed, it is hard to see how sensorimotor simulation could in principle account for all the kinds of thought, planning and reasoning that a problem, once successfully construed, demands. Simulated acting and sensing may well play a role, and perhaps even an essential role, in reasoning. But what about the capacity to examine arguments, to judge what follows from what, and to couch the issues in the highly abstract terms of a fundamental moral debate (e.g. using abstractions like ``liability", ``reasonable expectation", ``acceptable risk", etc.)? How can such abstractions be brought to a differentiable manifold under appropriate conditions for gradient methods to be applicable and to display good convergence characteristics? In the model, though (and this is the mild dilemma), it seems that the more decoupled and abstract the world-model becomes, either the less applicable the sensorimotor simulation strategy is, or the less clearly its interpretation can be differentiated from the more classical approaches it seeks to displace.

Truly inspiring questions for further research.

References

Anderson, John Robert (1995). The Architecture of Cognition, Lawrence Erlbaum Associates, Publishers.

Baratgin, Jean; Douven, Igor; Evans, Jonathan St.B. T.; Oaksford, Mike; Over, David; Politzer, Guy (2015). The new paradigm and mental models, Elsevier Science.

Barsalou, Lawrence (2008). Grounded Cognition, Annual review of psychology.

Barwise, Jon (1987). Unburdening the Language of Thought, Mind and Language.

Broyd, Samantha J.; Demanuele, Charmaine; Debener, Stefan; Helps, Suzannah; James, Christopher J.; Sonuga-Barke, Edmund J.S. (2009). Default-mode brain dysfunction in mental disorders: A systematic review, Neuroscience and Biobehavioral Reviews.

Bryson, A. E.; Ho, Y. C. (1969). Applied Optimal Control, Blaisdell.

Buckner, R. L.; DiNicola, L. M. (2019). The brain's default network: updated anatomy, physiology and evolving insights, Nat Rev Neurosci.

Chauvet, Jean-Marie (2018). The 30-Year Cycle In The AI Debate, CoRR :http://arxiv.org/abs/1810.04053.

Finn, Chelsea; Abbeel, Pieter; Levine, Sergey (2017). Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks.

Clark, Andy (1999). An Embodied Cognitive Science?, Trends in Cognitive Sciences.

Cole, Michael W.; Schneider, Walter (2007). The cognitive control network: Integrated cortical regions with dissociable functions, NeuroImage.

Cooper, Richard (2010). Forward and Inverse Models in Motor Control and Cognitive Control, Proceedings of the International Symposium on AI Inspired Biology - A Symposium at the AISB 2010 Convention.

Craik, K.J.W. (1943). The Nature of Explanation, Cambridge University Press.

Cummins, Robert (1991). Meaning and Mental Representation, The MIT Press.

Drozdov, Andrew; Schärli, Nathanael; Akyürek, Ekin; Scales, Nathan; Song, Xinying; Chen, Xinyun; Bousquet, Olivier; Zhou, Denny (2022). Compositional Semantic Parsing with Large Language Models.

Duncan, John (2013). The Structure of Cognition: Attentional Episodes in Mind and Brain, Elsevier.

Duncan, John (2010). The multiple-demand (MD) system of the primate brain: mental programs for intelligent behaviour, Trends in Cognitive Sciences :https://www.sciencedirect.com/science/article/pii/S1364661310000057.

Duncan, John; Assem, Moataz; Shashidhara, Sneha (2020). Integrated intelligence from distributed brain activity, Elsevier Science.

Ernst, George W.; Newell, Allen (1969). GPS: A Case Study in Generality and Problem Solving, New York (N.Y.): Academic Press.

Evans, Richard; Saxton, David; Amos, David; Kohli, Pushmeet; Grefenstette, Edward (2018). Can Neural Networks Understand Logical Entailment?

Feiten, Tim Elmo (2020). Mind After Uexküll: A Foray Into the Worlds of Ecological Psychologists and Enactivists, Frontiers in Psychology.

Flusberg, Stephen J.; McClelland, James L. (2017). Connectionism and the Emergence of Mind, Oxford University Press.

Fodor, Jerry A; Pylyshyn, Zenon W (1988). Connectionism and cognitive architecture: A critical analysis, Elsevier.

Friston, Karl (2010). The Free-Energy Principle: A Unified Brain Theory?, Nature Publishing Group.

Friston, Karl (2016). Predictive Coding, Active Inference and Belief Propagation, 2016 CCN Workshop: Predictive Coding :https://www.youtube.com/watch?v=b1hEc6vay_k.

Fultot, Martin; Turvey, Michael T (2019). Von Uexküll's theory of meaning and Gibson's organism–environment reciprocity, Taylor and Francis.

Gerstenberg, Tobias; Tenenbaum, Joshua B. (2017). Intuitive Theories, Oxford University Press.

Gibson, James J (2014). The Ecological Approach To Visual Perception, Psychology Press.

Haugeland, John (1981). Mind Design: Philosophy, Psychology, and Artificial Intelligence, The MIT Press.

Heider, Fritz; Simmel, Marianne (1944). An Experimental Study of Apparent Behavior, University of Illinois Press.

Hinton, G. E.; McClelland, J. L.; Rumelhart, D. E. (1986). Distributed Representations, MIT Press.

Hinton, Geoffrey E.; Zemel, Richard S. (1993). Autoencoders, Minimum Description Length and Helmholtz Free Energy.

Holland, John H. (1975). Adaptation in Natural and Artificial Systems, University of Michigan Press.

Hunt, Earl (1989). Connectionist and rule-based representations of expert knowledge, Behavior Research Methods, Instruments, and Computers.

Imamizu, Hiroshi; Kawato, Mitsuo (2009). Brain mechanisms for predictive control by switching internal models: implications for higher-order cognitive functions, Psychological Research PRPF.

Jobin, Anna; Ienca, Marcello; Vayena, Effy (2019). The global landscape of AI ethics guidelines, Nature Machine Intelligence.

Johnson-Laird, P. N. (1983). Mental Models: Towards a Cognitive Science of Language, Inference, and Consciousness, Harvard University Press.

Johnson-Laird, P. N. (1980). Mental Models in Cognitive Science, Elsevier Science.

Johnson-Laird, Philip N. (2010). Mental Models and Human Reasoning, Proceedings of the National Academy of Sciences.

Johnson-Laird, Philip Nicholas; Byrne, Ruth M. J. (1991). Deduction, Lawrence Erlbaum Associates, Inc.

Kahneman, Daniel (2011). Thinking, fast and slow, Farrar, Straus and Giroux.

Kirk, Donald E. (2012). Optimal Control Theory: An Introduction, Dover Publications.

Lakoff, George (1987). Women, Fire, and Dangerous Things, University of Chicago Press.

Le Cun, Yann (2022). A path towards autonomous machine intelligence version 0.9.2, 2022-06-27, Open Review :https://openreview.net/pdf?id=BZ5a1r-kVsf.

Le Cun, Yann (2019). Quand la machine apprend, Editions Odile Jacob.

Lillicrap, Timothy; Santoro, Adam; Marris, Luke; Akerman, Colin; Hinton, Geoffrey (2020). Backpropagation and the brain, Nature Reviews Neuroscience.

Marcus, Gary; Davis, Ernest (2019). Rebooting AI: Building Artificial Intelligence We Can Trust, Vintage.

McClelland, James L.; Rumelhart, David E. (1981). An interactive activation model of context effects in letter perception: I. An account of basic findings, American Psychological Association.

Minervini, Pasquale; Demeester, Thomas; Rocktäschel, Tim; Riedel, Sebastian (2017). Adversarial Sets for Regularising Neural Link Predictors, AUAI Press.

Minsky, Marvin; Papert, Seymour (1969). Perceptrons: An Introduction to Computational Geometry, MIT Press.

Newell, Allen (1990). Unified Theories of Cognition, Harvard University Press.

Olah, Chris (2022). Mechanistic Interpretability, Variables, and the Importance of Interpretable Bases, :https://www.transformer-circuits.pub/2022/mech-interp-essay.

Putnam, Hilary (1981). Reason, truth and history, Cambridge University Press.

Putnam, Hilary (1991). Representation and Reality, The MIT Press.

Raja, Vicente; Valluri, Dinesh; Baggs, Edward; Chemero, Anthony; Anderson, Michael L. (2021). The Markov blanket trick: On the scope of the free energy principle and active inference, Physics of Life Reviews.

Ramstead, Maxwell J.D. (2022). One person's modus ponens…, Elsevier.

Rapaport, William J. (1991). The Inner Mind and the Outer World: Guest Editor's Introduction to a Special Issue on Cognitive Science and Artificial Intelligence, Wiley.

Robbins, Scott (2019). A Misdirected Principle with a Catch: Explicability for AI, Minds and Machines.

Rumelhart, David E.; McClelland, James L. (1982). An interactive activation model of context effects in letter perception: II. The contextual enhancement effect and some tests and extensions of the model, American Psychological Association.

Rumelhart, David E.; McClelland, James L.; PDP Research Group (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition, The MIT Press.

Russell, Stuart J. (1997). Rationality and intelligence, Artificial Intelligence :https://www.sciencedirect.com/science/article/pii/S000437029700026X.

Sacerdoti, Earl D. (1977). A Structure for Plans and Behavior, Elsevier.

Schmidhuber, J. (1990). Making the World Differentiable: On Using Fully Recurrent Self-Supervised Neural Networks for Dynamic Reinforcement Learning and Planning in Non-Stationary Environments, Institut für Informatik, Technische Universität München :https://people.idsia.ch/~juergen/FKI-126-90_(revised)bw_ocr.pdf.

Shulman, Gordon; Fiez, Julie; Corbetta, Maurizio; Buckner, Randy; Miezin, Fran; Raichle, Marcus; Petersen, Steve (1997). Common Blood Flow Changes across Visual Tasks: II. Decreases in Cerebral Cortex, Journal of Cognitive Neuroscience.

Sloman, Steven (2007). Causal Models: How People Think about the World and Its Alternatives.

Smolensky, Paul (1988). On the proper treatment of connectionism, Cambridge University Press.

Smolensky, Paul (1986). Information Processing in Dynamical Systems: Foundations of Harmony Theory, The MIT Press.

Suchman, Lucy A. (1987). Plans and situated actions: The problem of human-machine communication, Cambridge University Press.

Surace, Simone Carlo; Pfister, Jean-Pascal; Gerstner, Wulfram; Brea, Johanni (2020). On the choice of metric in gradient-based theories of brain function, Public Library of Science.

Uexküll, Jakob von; Gross, Felix; others (1913). Bausteine zu einer biologischen Weltanschauung, F. Bruckmann.

Valmeekam, Karthik; Olmo, Alberto; Sreedharan, Sarath; Kambhampati, Subbarao (2022). Large Language Models Still Can't Plan (A Benchmark for LLMs on Planning and Reasoning about Change).

van Gelder, Tim (1990). Compositionality: A Connectionist Variation on a Classical Theme, Cognitive Science.

Vilone, Giulia; Longo, Luca (2021). Notions of explainability and evaluation approaches for explainable artificial intelligence, Information Fusion :https://www.sciencedirect.com/science/article/pii/S1566253521001093.

Walsh, Kevin S; McGovern, David P; Clark, Andy; O'Connell, Redmond G (2020). Evaluating the neurophysiological evidence for predictive processing as a model of perception, Annals of the New York Academy of Sciences.

Wei, Jason; Wang, Xuezhi; Schuurmans, Dale; Bosma, Maarten; Ichter, Brian; Xia, Fei; Chi, Ed; Le, Quoc; Zhou, Denny (2023). Chain-of-Thought Prompting Elicits Reasoning in Large Language Models, :https://arxiv.org/abs/2201.11903.

Yao, Shunyu; Yu, Dian; Zhao, Jeffrey; Shafran, Izhak; Griffiths, Thomas; Cao, Yuan; Narasimhan, Karthik (2023). Tree of Thoughts: Deliberate Problem Solving with Large Language Models, :https://arxiv.org/abs/2305.10601.

Zador, Anthony; Escola, Sean; Richards, Blake; Ölveczky, Bence; Bengio, Yoshua; Boahen, Kwabena; Botvinick, Matthew; Chklovskii, Dmitri; Churchland, Anne; Clopath, Claudia; DiCarlo, James; Ganguli, Surya; Hawkins, Jeff; Körding, Konrad; Koulakov, Alexei; Le Cun, Yann; Lillicrap, Timothy; Marblestone, Adam; Olshausen, Bruno; Pouget, Alexandre; Savin, Cristina; Sejnowski, Terrence; Simoncelli, Eero; Solla, Sara; Sussillo, David; Tolias, Andreas S.; Tsao, Doris (2023). Catalyzing next-generation Artificial Intelligence through NeuroAI, Nature Communications :https://doi.org/10.1038/s41467-023-37180-x.

The content of this page by CRT_and_KDU is licensed under a Creative Commons Attribution-ShareAlike 4.0 International license.